HIGH PERFORMANCE COMPUTING ARCHITECTURES & TECHNOLOGIES LAB

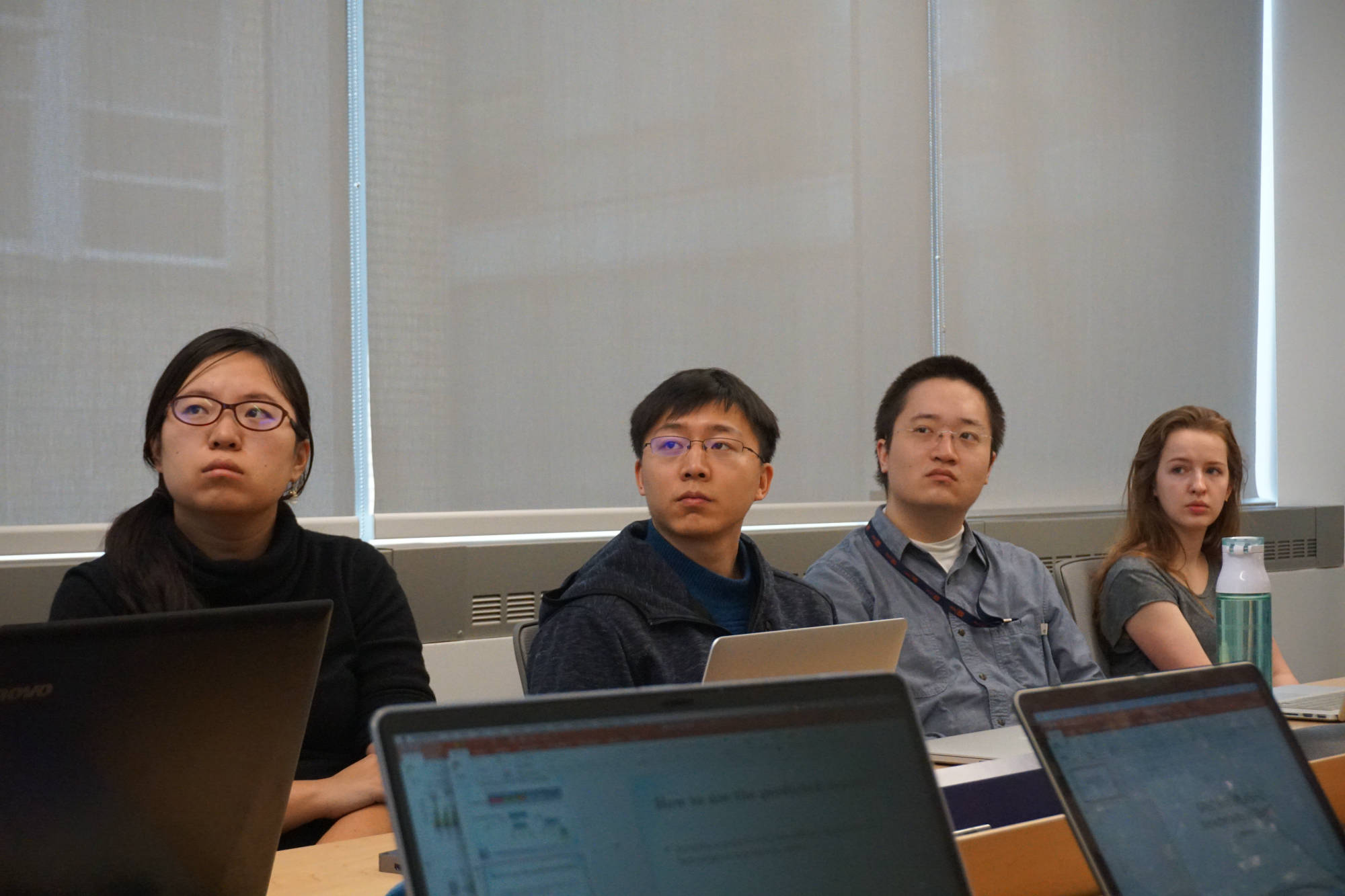

We are looking for new post-doctoral research fellows and Ph.D. students!
